SKEDSOFT

Agent architectures: We will next discuss various agent architectures.

Table-based agent: In a table-based agent, the action is looked up from a table indexed by the agent’s percepts. A table is a simple way to specify a mapping from percepts to actions. More generally, the mapping may be defined implicitly by a program, and it may be implemented by a rule-based system, by a neural network or by a procedure. A table-based system has several disadvantages. The table may become very large. Learning a table may take a very long time, especially if the table is large. Such systems usually have little autonomy, as all actions are predetermined.
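As an illustration, the lookup can be sketched in a few lines of Python. The two-square vacuum world, the percept format and the action names below are assumptions chosen for the example, not part of the original notes.

```python
# Hypothetical two-square vacuum world: a percept is a (location, status) pair.
# The table lists one action for every percept the agent can receive.
ACTION_TABLE = {
    ("A", "dirty"): "suck",
    ("A", "clean"): "move_right",
    ("B", "dirty"): "suck",
    ("B", "clean"): "move_left",
}


def table_agent(percept):
    """Look up the action for a percept; return 'noop' if the percept is not in the table."""
    return ACTION_TABLE.get(percept, "noop")


print(table_agent(("A", "dirty")))
print(table_agent(("B", "clean")))
```

Even in this tiny world the table needs one entry per percept. If the table were indexed by whole percept sequences rather than single percepts, the number of entries would grow exponentially with the length of the sequence, which is one reason such tables become very large.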

Percept-based agent or reflex agent: In percept-based agents,

- information comes from sensors - percepts

- this changes the agent’s current state of the world

- triggers actions through the effectors

Such agents are called reactive agents or stimulus-response agents. Reactive agents have no notion of history. The current state is as the sensors see it right now. The action is based on the current percepts only. The following are some of the characteristics of percept-based agents.

- Efficient

- No internal representation for reasoning or inference.

- No strategic planning or learning.

- Percept-based agents are not well suited to multiple, opposing goals.
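A percept-based agent can be sketched as a set of condition-action rules applied to the current percept alone. The percept fields and action names here are hypothetical and serve only to illustrate the idea.

```python
def reflex_agent(percept):
    """Choose an action from the current percept only; no history is stored."""
    # Rules are checked in order; the first matching condition decides the action.
    if percept["obstacle_ahead"]:
        return "turn_left"
    if percept["light_detected"]:
        return "move_toward_light"
    return "move_forward"


print(reflex_agent({"obstacle_ahead": True, "light_detected": True}))
print(reflex_agent({"obstacle_ahead": False, "light_detected": False}))
```

Because the function keeps no state, the same percept always produces the same action, however many times it has been seen before.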

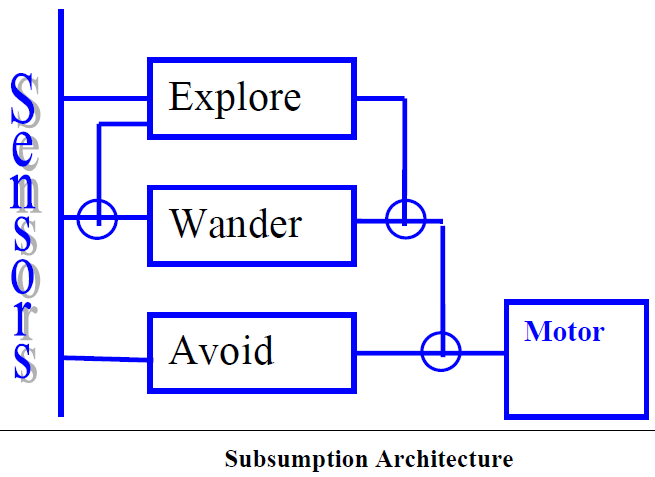

Subsumption Architecture: We will now briefly describe the subsumption architecture (Rodney Brooks, 1986). This architecture is based on reactive systems. Brooks notes that in lower animals there is no deliberation and the actions are based on sensory inputs. Yet even lower animals are capable of many complex tasks. His argument is to follow the evolutionary path and build simple agents for complex worlds. The main features of Brooks’ architecture are:

- There is no explicit knowledge representation

- Behaviour is distributed, not centralized

- Response to stimuli is reflexive

- The design is bottom up, and complex behaviours are fashioned from the combination of simpler underlying ones.

- Individual agents are simple

The subsumption architecture is built in layers, each implementing a different level of behaviour. The higher layers can override lower layers. Each activity is modeled by a finite state machine. The subsumption architecture can be illustrated by Brooks’ Mobile Robot example.

The system is built in three layers.

1. Layer 0: Avoid Obstacles

2. Layer 1: Wander behaviour

3. Layer 2: Exploration behaviour

Layer 0 (Avoid Obstacles) has the following capabilities:

• Sonar: generate sonar scan

• Collide: send HALT message to forward

• Feel force: signal sent to run-away, turn

Layer 1 (Wander behaviour):

• Generates a random heading

• Avoid reads repulsive force, generates new heading, feeds to turn and forward

Layer 2 (Exploration behaviour):

• Whenlook notices idle time and looks for an interesting place.

• Pathplan sends a new direction to Avoid.

• Integrate monitors the path travelled and sends it to Pathplan.
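The layering idea can be sketched as follows. This is a deliberately simplified illustration, not Brooks' actual design: in his robot each layer is a network of finite state machines, and overriding happens on individual module connections. Here each layer is a single hypothetical function that either proposes a command or returns `None`, and the percept fields, thresholds and command names are assumptions.

```python
def layer0_avoid(percept):
    """Layer 0: always has an answer; run away from obstacles that are too close."""
    return "run_away" if percept["obstacle_distance"] < 1.0 else "stay"


def layer1_wander(percept):
    """Layer 1: wander when the way is clear, otherwise stay silent."""
    return "wander" if percept["obstacle_distance"] >= 1.0 else None


def layer2_explore(percept):
    """Layer 2: head for an interesting place when one is visible and the way is clear."""
    if percept["target_visible"] and percept["obstacle_distance"] >= 1.0:
        return "go_to_target"
    return None


# Layers listed from lowest to highest.
LAYERS = [layer0_avoid, layer1_wander, layer2_explore]


def arbitrate(percept):
    """Return the command of the highest layer that proposes one."""
    for layer in reversed(LAYERS):
        command = layer(percept)
        if command is not None:
            return command
    return "stay"


print(arbitrate({"obstacle_distance": 0.2, "target_visible": True}))
print(arbitrate({"obstacle_distance": 2.0, "target_visible": False}))
print(arbitrate({"obstacle_distance": 2.0, "target_visible": True}))
```

In this sketch the higher layers stay silent near an obstacle, so the obstacle-avoidance layer keeps control in exactly the situations it was built for. Removing a higher layer from `LAYERS` leaves a working, if less capable, robot, which reflects the bottom-up design described above.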

State-based Agent or model-based reflex agent: State based agents differ from percept based agents in that such agents maintain some sort of state based on the percept sequence received so far. The state is updated regularly based on what the agent senses, and the agent’s actions. Keeping track of the state requires that the agent has knowledge about how the world evolves, and how the agent’s actions affect the world. Thus a state based agent works as follows:

• information comes from sensors - percepts

• based on this, the agent updates its internal record of the current state of the world

• based on state of the world and knowledge (memory), it triggers actions through the effectors
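The three steps above can be sketched with a small class that keeps an internal state between percepts. The two-square vacuum world, the class name and the action names are assumptions made for the example.

```python
class ModelBasedVacuum:
    """Hypothetical agent that remembers which squares it believes are clean."""

    def __init__(self):
        self.known_clean = set()  # internal state built from the percept sequence

    def step(self, percept):
        location, status = percept
        # Update the state from what the agent senses right now.
        if status == "clean":
            self.known_clean.add(location)
        else:
            self.known_clean.discard(location)
        if status == "dirty":
            # Knowledge of how actions affect the world: sucking cleans this square.
            self.known_clean.add(location)
            return "suck"
        if self.known_clean >= {"A", "B"}:
            return "noop"  # both squares are believed clean, so stop
        return "move_right" if location == "A" else "move_left"


agent = ModelBasedVacuum()
for percept in [("A", "dirty"), ("A", "clean"), ("B", "clean")]:
    print(percept, "->", agent.step(percept))
```

A purely percept-based agent would answer `("B", "clean")` with a move every time, because it cannot remember that square A has already been cleaned. The stored state is what lets this agent stop.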

Goal-based Agent: The goal based agent has some goal which forms a basis of its actions. Such agents work as follows:

• information comes from sensors - percepts

• this changes the agent’s current state of the world

• based on state of the world and knowledge (memory) and goals/intentions, it chooses actions and does them through the effectors.

Goal formulation based on the current situation is a way of solving many problems, and search is a universal problem-solving mechanism in AI. The sequence of steps required to solve a problem is not known a priori and must be determined by a systematic exploration of the alternatives.
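One way to picture this systematic exploration is breadth-first search over a small state graph. The sketch below is a generic illustration under assumed names: the room map `ROOMS` and the function `plan` are hypothetical, and breadth-first search is only one of many search strategies a goal-based agent could use.

```python
from collections import deque


def plan(start, goal, neighbors):
    """Breadth-first search; return the list of states from start to goal, or None."""
    frontier = deque([start])
    parent = {start: None}  # also serves as the set of visited states
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:  # walk back through the parents
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors.get(state, []):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None


# Hypothetical map: each room lists the rooms reachable from it in one step.
ROOMS = {
    "hall": ["kitchen", "study"],
    "kitchen": ["pantry"],
    "study": ["lab"],
    "lab": ["vault"],
    "pantry": [],
    "vault": [],
}

print(plan("hall", "vault", ROOMS))
```

The agent does not know the route in advance. It finds the sequence of steps by expanding alternatives level by level until the goal state appears.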

Utility-based Agent: Utility-based agents provide a more general agent framework. When the agent has multiple goals, this framework can accommodate different preferences for the different goals. Such systems are characterized by a utility function that maps a state or a sequence of states to a real-valued utility. The agent acts so as to maximize expected utility.
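Maximizing expected utility can be sketched as follows. The actions, outcome probabilities and utility values are invented for the example; only the rule of choosing the action with the highest probability-weighted utility comes from the text.

```python
def expected_utility(action, outcomes, utility):
    """Sum of utility(state) weighted by the probability of reaching that state."""
    return sum(p * utility(state) for state, p in outcomes[action])


def choose_action(outcomes, utility):
    """Pick the action whose expected utility is highest."""
    return max(outcomes, key=lambda a: expected_utility(a, outcomes, utility))


# Hypothetical model: each action leads to states with the given probabilities.
OUTCOMES = {
    "highway": [("on_time", 0.5), ("late", 0.5)],
    "back_roads": [("on_time", 0.75), ("late", 0.25)],
}

# Hypothetical preferences: arriving on time is good, arriving late is bad.
UTILITY = {"on_time": 10.0, "late": -5.0}

for action in OUTCOMES:
    print(action, expected_utility(action, OUTCOMES, UTILITY.get))
print(choose_action(OUTCOMES, UTILITY.get))
```

With these numbers the expected utilities are 2.5 for `highway` and 6.25 for `back_roads`, so the agent chooses `back_roads`. Changing the utility values changes the trade-off between goals without changing the decision rule.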

Learning Agent: Learning allows an agent to operate in initially unknown environments. The learning element modifies the performance element. Learning is required for true autonomy.
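The relationship between the two elements can be sketched in a few lines. This is a minimal illustration under assumptions: the class, the reward signal and the update rule (moving an estimate part of the way toward each observed reward) are chosen for the example and are not prescribed by the notes.

```python
class LearningAgent:
    """Hypothetical agent with a performance element and a learning element."""

    def __init__(self, actions, learning_rate=0.5):
        # Knowledge used by the performance element: one value estimate per action.
        self.values = {a: 0.0 for a in actions}
        self.learning_rate = learning_rate

    def act(self):
        """Performance element: choose the action with the highest estimate."""
        return max(self.values, key=self.values.get)

    def learn(self, action, reward):
        """Learning element: move the estimate for the action toward the reward."""
        old = self.values[action]
        self.values[action] = old + self.learning_rate * (reward - old)


agent = LearningAgent(["left", "right"])
print(agent.act())          # no experience yet, so the first action is chosen
agent.learn("left", -1.0)   # the environment punished "left"
print(agent.act())          # the agent now prefers the other action
```

The agent starts with no knowledge of the environment, and its behaviour changes only because `learn` rewrites the estimates that `act` relies on. A practical agent would also need some way to try actions it currently rates poorly, which this sketch leaves out.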