SKEDSOFT

Introduction: The Turing test is named after Alan Mathison Turing, an English computer scientist and mathematician.

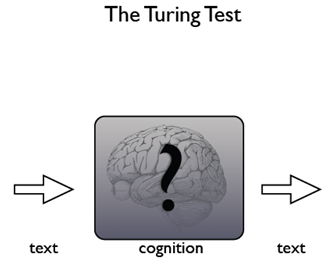

Turing test: Abandoning the philosophical question of what it means for an artificial entity to think or have intelligence, Alan Turing developed an empirical test of artificial intelligence, which is better suited to the computer scientist endeavoring to implement artificial intelligence on a computer. The Turing test is an operational test; that is, it provides a concrete way to determine whether an entity is intelligent. The test involves a human interrogator in one room, another human being in a second room, and an artificial entity in a third room. The interrogator may communicate with the other human and the artificial entity only through a textual device such as a terminal. The interrogator is asked to distinguish the other human from the artificial entity based on their answers to the interrogator's questions. If the interrogator cannot do this, the Turing test is passed and we say that the artificial entity is intelligent.
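The protocol above can be sketched as a small simulation. This is a minimal illustration, not a definitive formalization: the names `run_round`, `identification_rate`, and the toy respondents are hypothetical, and the idea of repeating the session many times and comparing the interrogator's hit rate to chance (0.5) is an assumption added here to give "cannot distinguish" a measurable meaning.

```python
import random
from typing import Callable, Dict, List, Tuple

# A respondent maps a typed question to a typed answer (text-only channel).
Respondent = Callable[[str], str]
# A transcript holds the (question, answer) pairs seen on each anonymous channel.
Transcript = Dict[str, List[Tuple[str, str]]]
# The interrogator reads both transcripts and names the channel ("A" or "B")
# that it believes is the artificial entity.
Interrogator = Callable[[Transcript], str]


def run_round(interrogator: Interrogator, human: Respondent, machine: Respondent,
              questions: List[str], rng: random.Random) -> bool:
    """Run one session; return True if the interrogator spots the machine."""
    labels = ["A", "B"]
    rng.shuffle(labels)  # hide which room is behind which channel
    human_label, machine_label = labels
    channels = {human_label: human, machine_label: machine}
    transcript = {
        label: [(q, channels[label](q)) for q in questions]
        for label in ("A", "B")
    }
    return interrogator(transcript) == machine_label


def identification_rate(interrogator: Interrogator, human: Respondent,
                        machine: Respondent, questions: List[str],
                        rounds: int = 1000, seed: int = 0) -> float:
    """Fraction of sessions in which the machine is correctly identified."""
    rng = random.Random(seed)
    hits = sum(run_round(interrogator, human, machine, questions, rng)
               for _ in range(rounds))
    return hits / rounds


# Toy participants (all hypothetical, for illustration only).
def human(question: str) -> str:
    return "I would rather talk about the weather."


def obvious_machine(question: str) -> str:
    return "QUERY NOT RECOGNIZED"


def mimic_machine(question: str) -> str:
    return "I would rather talk about the weather."


def keyword_interrogator(transcript: Transcript) -> str:
    """Accuse the channel whose answers contain a tell-tale keyword."""
    for label, exchanges in sorted(transcript.items()):
        if any("QUERY" in answer for _, answer in exchanges):
            return label
    return "A"  # no tell-tale found: fall back to a fixed guess


QUESTIONS = ["What is your favorite poem?", "What did you have for breakfast?"]
```

With these toy participants, `identification_rate(keyword_interrogator, human, obvious_machine, QUESTIONS)` is 1.0, so `obvious_machine` fails the test. Against `mimic_machine` the interrogator has nothing to go on, and its hit rate should sit close to 0.5, which is the sense in which that machine "passes" under the assumption stated above.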

- Note that the Turing test avoids physical interaction between the interrogator and the artificial entity; the assumption is that physical interaction is not necessary for intelligence. For example, HAL in the movie 2001: A Space Odyssey is simply an entity with which the crew communicates, and HAL would pass the Turing test. If the interrogator is provided with visual information about the artificial entity so that the interrogator can test the entity’s ability to perceive and navigate in the world, we call the test the total Turing test. The Terminator in the movie of the same name would pass this test.

- Searle took exception to the Turing test with his Chinese room thought experiment. The experiment proceeds as follows. Suppose that we have successfully developed a computer program that appears to understand Chinese. That is, the program takes sentences written with Chinese characters as input, processes the characters, and outputs sentences written using Chinese characters. If it is able to convince a Chinese interrogator that it is a human, then the Turing test would be passed.

- Searle asks “does the program literally understand Chinese or is it only simulating the ability to understand Chinese?” To address this question, Searle proposes that he could sit in a closed room holding a book with an English version of the program, and adequate paper and pencils to carry out the instructions of the program by hand. The Chinese interrogator could then provide Chinese sentences through a slot in the door, Searle could process them using the program’s instructions, and send Chinese sentences back through the same slot. See Figure 1.1. Searle says that he has performed the exact same task as the computer that passed the Turing test. That is, each is following a program that simulates intelligent behavior. However, Searle notes that he does not speak Chinese. Therefore, since he does not understand Chinese, the reasonable conclusion is that the computer does not understand Chinese either.

- Searle argues that if the computer does not understand the conversation, then it is not thinking, and therefore it does not have an intelligent mind. Searle formulated the philosophical position known as strong AI, which is as follows:

- The appropriately programmed computer really is a mind, in the sense that computers given the right programs can be literally said to understand and have other cognitive states.

- Based on his Chinese room experiment, Searle concludes that strong AI is not possible. He states that “I can have any formal program you like, but I still understand nothing.” Searle’s paper generated a great deal of controversy and discussion that continued for some time.

- The position that computers could appear and behave intelligently, but not necessarily understand, is called weak AI. The essence of the matter is whether a computer could actually have a mind (strong AI) or could only simulate a mind (weak AI). This distinction is perhaps of greater concern to the philosopher who is discussing the notion of consciousness [Chalmers, 1996]. Perhaps facetiously, a philosopher could even argue that emergence might take place in the Chinese room experiment, and a mind might arise from Searle performing all his manipulations. Practically speaking, none of this is of concern to the computer scientist. If the program for all practical purposes behaves as if it is intelligent, computer scientists have achieved their goal.
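The Chinese room setup described earlier can be made concrete with a toy sketch. It is only an illustration under strong simplifying assumptions: the program Searle imagines would be far richer than a lookup table, and the names `RULE_BOOK`, `FALLBACK_REPLY`, and `room_operator` are hypothetical. What the sketch does show is the structural point of the argument: the operator matches incoming symbols against a rule book and copies out the prescribed reply, and nothing in the procedure depends on what the symbols mean.

```python
from typing import Dict

# Hypothetical rule book: maps input symbol strings to output symbol strings.
# The English glosses in the comments are for the reader only; the operator
# function below never uses them.
RULE_BOOK: Dict[str, str] = {
    "你好吗？": "我很好，谢谢。",      # "How are you?" -> "I am fine, thank you."
    "你会说中文吗？": "会，当然。",    # "Do you speak Chinese?" -> "Yes, of course."
}
FALLBACK_REPLY = "请再说一遍。"        # "Please say that again."


def room_operator(symbols: str, rule_book: Dict[str, str] = RULE_BOOK) -> str:
    """Return the reply the rule book dictates, matching symbols by shape only."""
    return rule_book.get(symbols, FALLBACK_REPLY)


# A sentence passed in through the slot, and the reply passed back out.
reply = room_operator("你好吗？")
```

Whether the procedure is carried out by this function or by a person following the same rules by hand, the exchange seen from outside the room is identical. That is the behavior weak AI is concerned with, while the strong AI question is whether anything in the system understands the exchange.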