SKEDSOFT

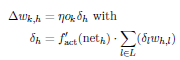

The same applies for the first factor according to the definition of δ:

$$-\frac{\partial \mathrm{Err}}{\partial \mathrm{net}_l} = \delta_l \tag{8}$$

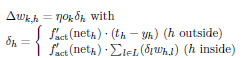

Now we insert:

$$\delta_h = f'_{\mathrm{act}}(\mathrm{net}_h) \cdot \sum_{l \in L} \left(\delta_l \cdot w_{h,l}\right) \tag{9}$$

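The desired change in weight $\Delta w_{k,h}$ is therefore

$$\Delta w_{k,h} = \eta \, o_k \, \delta_h \quad \text{with} \quad \delta_h = f'_{\mathrm{act}}(\mathrm{net}_h) \cdot \sum_{l \in L} \left(\delta_l \cdot w_{h,l}\right) \tag{10}$$

This of course holds only if h is an inner neuron (otherwise there would be no subsequent layer L).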
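The inner-neuron rule can be checked numerically. The following is a minimal sketch, not part of the original derivation: it assumes a single inner neuron h feeding a layer L of output neurons, tanh as the activation function, and the squared error Err = ½ Σ (t_l − y_l)². The function names are hypothetical. It compares δ_h computed from the sum over the following layer with a finite-difference estimate of −∂Err/∂net_h.

```python
import math


def tanh_prime(net):
    """Derivative of tanh, used here as the assumed activation function."""
    return 1.0 - math.tanh(net) ** 2


def output_deltas(net_h, weights_out, targets):
    """delta_l = f'(net_l) * (t_l - y_l) for every neuron l in the following layer L."""
    o_h = math.tanh(net_h)
    deltas = []
    for w_hl, t_l in zip(weights_out, targets):
        net_l = w_hl * o_h
        deltas.append(tanh_prime(net_l) * (t_l - math.tanh(net_l)))
    return deltas


def hidden_delta(net_h, deltas_next, weights_out):
    """delta_h = f'(net_h) * sum over l in L of (delta_l * w_{h,l})."""
    weighted_sum = sum(d_l * w_hl for d_l, w_hl in zip(deltas_next, weights_out))
    return tanh_prime(net_h) * weighted_sum


def error_from_net_h(net_h, weights_out, targets):
    """Assumed error Err = 1/2 * sum (t_l - y_l)^2, seen as a function of net_h."""
    o_h = math.tanh(net_h)
    return 0.5 * sum(
        (t_l - math.tanh(w_hl * o_h)) ** 2
        for w_hl, t_l in zip(weights_out, targets)
    )


def gradient_check(net_h, weights_out, targets, eps=1e-6):
    """Return (analytic delta_h, central-difference estimate of -dErr/dnet_h)."""
    analytic = hidden_delta(
        net_h, output_deltas(net_h, weights_out, targets), weights_out
    )
    numeric = -(
        error_from_net_h(net_h + eps, weights_out, targets)
        - error_from_net_h(net_h - eps, weights_out, targets)
    ) / (2.0 * eps)
    return analytic, numeric


if __name__ == "__main__":
    analytic, numeric = gradient_check(0.5, [0.4, -0.7], [0.3, -0.2])
    print(analytic, numeric)
```

Under these assumptions the two values should agree up to the finite-difference error, which illustrates that δ_h is obtained purely from the δ_l of the following layer and the connecting weights.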

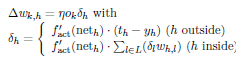

The result is the generalization of the delta rule, called backpropagation of error:

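$$\Delta w_{k,h} = \eta \, o_k \, \delta_h \quad \text{with} \quad \delta_h = \begin{cases} f'_{\mathrm{act}}(\mathrm{net}_h) \cdot (t_h - y_h) & \text{if } h \text{ is an output neuron} \\ f'_{\mathrm{act}}(\mathrm{net}_h) \cdot \sum_{l \in L} \left(\delta_l \cdot w_{h,l}\right) & \text{if } h \text{ is an inner neuron} \end{cases} \tag{11}$$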

The final formula for backpropagation

Backpropagation thus first processes the last weight layer directly by means of the teaching input and then works backwards from layer to layer, taking each preceding change in weights into account. In this way, the teaching input leaves traces in all weight layers.
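The backward sweep described above can be sketched for a tiny network with one inner layer. This is an illustrative sketch only: it assumes tanh activations, no bias neurons, the squared error ½ Σ (t − y)², and online learning with a fixed learning rate η. The names `forward`, `backprop_step` and `error`, as well as all numerical values, are hypothetical.

```python
import math


def f_act(net):
    """Assumed activation function (tanh)."""
    return math.tanh(net)


def f_act_prime(net):
    """Derivative of the assumed activation function."""
    return 1.0 - math.tanh(net) ** 2


def forward(x, w_hidden, w_out):
    """Propagate input x forward; w_hidden[k][h] and w_out[h][l] are weights."""
    n_hidden, n_out = len(w_hidden[0]), len(w_out[0])
    net_h = [sum(w_hidden[k][h] * x[k] for k in range(len(x))) for h in range(n_hidden)]
    o_h = [f_act(n) for n in net_h]
    net_y = [sum(w_out[h][l] * o_h[h] for h in range(n_hidden)) for l in range(n_out)]
    y = [f_act(n) for n in net_y]
    return net_h, o_h, net_y, y


def backprop_step(x, t, w_hidden, w_out, eta=0.1):
    """One weight update: output deltas first, then inner deltas, then the changes."""
    net_h, o_h, net_y, y = forward(x, w_hidden, w_out)
    n_hidden, n_out = len(o_h), len(y)

    # Output neurons: delta uses the teaching input t directly.
    delta_out = [f_act_prime(net_y[l]) * (t[l] - y[l]) for l in range(n_out)]

    # Inner neurons: delta is built from the deltas of the following layer.
    delta_hid = [
        f_act_prime(net_h[h]) * sum(delta_out[l] * w_out[h][l] for l in range(n_out))
        for h in range(n_hidden)
    ]

    # Weight change eta * o_k * delta_h, applied to both weight layers.
    new_w_out = [
        [w_out[h][l] + eta * o_h[h] * delta_out[l] for l in range(n_out)]
        for h in range(n_hidden)
    ]
    new_w_hidden = [
        [w_hidden[k][h] + eta * x[k] * delta_hid[h] for h in range(n_hidden)]
        for k in range(len(x))
    ]
    return new_w_hidden, new_w_out


def error(x, t, w_hidden, w_out):
    """Assumed error measure: 1/2 * sum of squared output differences."""
    y = forward(x, w_hidden, w_out)[3]
    return 0.5 * sum((t_l - y_l) ** 2 for t_l, y_l in zip(t, y))


if __name__ == "__main__":
    x, t = [1.0, 0.5], [0.5]
    w_hidden = [[0.1, -0.2], [0.3, 0.4]]
    w_out = [[0.2], [-0.1]]
    before = error(x, t, w_hidden, w_out)
    w_hidden, w_out = backprop_step(x, t, w_hidden, w_out)
    print(before, error(x, t, w_hidden, w_out))
```

Note that the inner deltas are computed with the old output weights before any weight is changed, so the teaching input reaches the first weight layer only through the deltas of the layer after it.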