SKEDSOFT

Introduction: One of the most widely used neural network models is the self-organizing map (SOM), introduced and developed by Teuvo Kohonen (1982-1993). It is used mainly for vector quantization and data analysis, but it is also applicable to almost all the tasks where neural networks have been tried successfully.

The Philosophy of the Kohonen Network

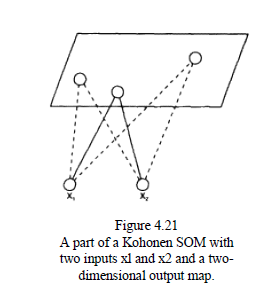

Self-organizing topological or feature maps became popular because they tend to be very powerful at solving problems based on vector quantization. A SOM consists of two layers: an input layer and an output layer, called a feature map, which represents the output vectors of the output space. The weights of the connections from an output neuron j to all n input neurons form a vector wj in an n-dimensional space.
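The two-layer structure described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the function name `init_som`, the rectangular grid layout, and the random initialization in [0, 1) are hypothetical choices and are not specified in the text.

```python
import random

def init_som(rows, cols, n_inputs, seed=0):
    """Build a rows x cols feature map (hypothetical helper).

    Each output neuron j holds one weight vector wj with n_inputs
    components, one per input neuron.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [[[rng.random() for _ in range(n_inputs)]
             for _ in range(cols)]
            for _ in range(rows)]

som = init_som(rows=3, cols=4, n_inputs=2)
w_j = som[1][2]  # weight vector of the output neuron at grid position (1, 2)
```

Here the input layer is implicit: it is simply the length of each weight vector, so a map with `n_inputs=2` expects two-dimensional input vectors.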

The input values may be continuous or discrete, but the output values are binary only. The main ideas of SOM are as follows:

Output neurons specialize during the training or recall procedure to react to input vectors of some groups (clusters) and to represent typical features shared by the input vectors. This characteristic of the SOM tends to be biologically plausible, as there is evidence that the brain is organized into regions that correspond to different sensory stimuli. There is also evidence for linguistic units being locatable within the human brain. A SOM is able to extract abstract information from multidimensional primary signals and to represent it as a location in a one-, two-, three-, or higher-dimensional space.

The neurons in the output layer are competitive. Lateral interaction between neighboring neurons is introduced in such a way that a neuron has a strong excitatory connection to itself and weaker excitatory connections to its neighboring neurons within a certain radius; beyond this area, a neuron either inhibits the activation of the other neurons through inhibitory connections or does not influence it. One possible rule is the so-called Mexican hat rule. In general, this is the "winner-takes-all" scheme, where only one neuron is the winner after an input vector has been fed in and a competition between the output neurons has taken place. The winning neuron represents the class, the label, and the feature to which the input vector belongs.
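The competition described above can be illustrated with a minimal sketch. Two assumptions are made that the text does not state: the Mexican hat profile is modelled here as an unnormalized Ricker-style function of grid distance, and the winner is chosen as the neuron whose weight vector has the smallest squared Euclidean distance to the input. The names `mexican_hat`, `winner`, and `binary_output` are hypothetical.

```python
import math

def mexican_hat(d, sigma=1.0):
    """Lateral interaction strength at grid distance d (assumed profile).

    Positive (excitatory) near d = 0, negative (inhibitory) at medium
    range, and close to zero (no influence) far away.
    """
    s = (d / sigma) ** 2
    return (1.0 - s) * math.exp(-s / 2.0)

def winner(weights, x):
    """Index of the neuron whose weight vector is closest to x."""
    dists = [sum((wi - xi) ** 2 for wi, xi in zip(w, x)) for w in weights]
    return min(range(len(weights)), key=dists.__getitem__)

def binary_output(weights, x):
    """Winner-takes-all output: 1 for the winning neuron, 0 elsewhere."""
    k = winner(weights, x)
    return [1 if j == k else 0 for j in range(len(weights))]

weights = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
out = binary_output(weights, [0.9, 0.2])  # neuron 1 is closest to the input
```

Selecting the closest neuron directly is a common shortcut for the outcome of the lateral competition; it yields the binary output vector without simulating the excitatory and inhibitory dynamics step by step.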

The SOM maps the similarity between input vectors in the input space onto the topological closeness of neurons in the output space, which is represented as a topological map. Similar input vectors are represented by nearby points (neurons) in the output space. The distance between the neurons in the output layer matters, as this is a significant property of the network.
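One way such a topology-preserving map can arise is through a neighbourhood update, in which the winner and its grid neighbours all move toward the current input. The sketch below shows a single update step on a 1-D chain of neurons. The update rule, the Gaussian neighbourhood (used here instead of the Mexican hat for simplicity), and the parameter names `lr` and `radius` are assumptions for illustration; the text above does not give the training rule.

```python
import math

def train_step(weights, x, lr=0.5, radius=1.0):
    """One Kohonen-style update on a 1-D chain of neurons (assumed rule)."""
    # Winner: the neuron whose weight vector is closest to x.
    k = min(range(len(weights)),
            key=lambda j: sum((wi - xi) ** 2
                              for wi, xi in zip(weights[j], x)))
    for j, w in enumerate(weights):
        # Neighbourhood factor: 1 at the winner, decaying with grid distance.
        h = math.exp(-((j - k) ** 2) / (2.0 * radius ** 2))
        for i in range(len(w)):
            # Move the weight toward the input, scaled by lr and h.
            w[i] += lr * h * (x[i] - w[i])
    return k

weights = [[0.0], [0.4], [1.0]]
k = train_step(weights, [0.5])  # updates weights in place, returns the winner
```

Because neighbours on the grid are pulled toward the same inputs as the winner, neurons that are close on the map tend to end up with similar weight vectors, which is the property the paragraph above describes.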

There are two ways of using the SOM. The first is to use it in unsupervised mode only, where the labels and classes that the input vectors belong to are unknown. The second is to use the SOM for unsupervised training followed by supervised training. LVQ (learning vector quantization) algorithms (Kohonen 1990) have been developed for this purpose. In the LVQ algorithms, after an initial unsupervised learning phase is performed in a SOM, the output neurons are calibrated, that is, labelled for the known classes, and supervised learning is then performed, which refines the map according to what is known about the output classes, regions, groups, and labels.
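The supervised refinement step can be sketched as follows. This is a minimal illustration in the style of the LVQ1 variant, which is an assumption, since the text does not say which LVQ algorithm is meant. The function name `lvq1_step`, the learning rate `lr`, and the toy prototypes and labels are hypothetical.

```python
def lvq1_step(prototypes, labels, x, y, lr=0.1):
    """One LVQ1-style update for a labelled input (x, y).

    The nearest prototype is attracted to x when its label matches y
    and repelled from x when it does not.
    """
    k = min(range(len(prototypes)),
            key=lambda j: sum((pi - xi) ** 2
                              for pi, xi in zip(prototypes[j], x)))
    sign = 1.0 if labels[k] == y else -1.0
    prototypes[k] = [pi + sign * lr * (xi - pi)
                     for pi, xi in zip(prototypes[k], x)]
    return k

# Prototypes stand in for the calibrated (labelled) output neurons.
prototypes = [[0.0, 0.0], [1.0, 1.0]]
labels = ["A", "B"]
lvq1_step(prototypes, labels, [0.2, 0.0], "A")  # match: prototype 0 moves toward x
lvq1_step(prototypes, labels, [0.8, 1.0], "A")  # mismatch: prototype 1 moves away from x
```

In a full pipeline the prototypes would be the weight vectors produced by the unsupervised SOM phase, and this step would be repeated over the labelled training set with a gradually decreasing learning rate.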