SKEDSOFT

Introduction:- The neurons are grouped in the following layers: one input layer, n hidden processing layers and one output layer. In a feedforward network, each neuron in one layer has directed connections only to the neurons of the next layer.

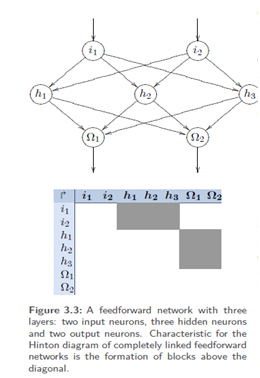

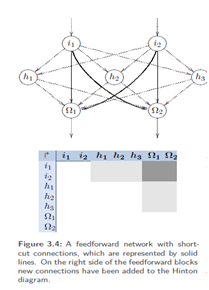

Feedforward network:- The neuron layers of a feedforward network are clearly separated: one input layer, one output layer and one or more processing layers that are invisible from the outside (also called hidden layers). Connections are only permitted to neurons of the following layer. Some feedforward networks permit so-called shortcut connections, which skip one or more layers but still point forward, toward the output layer.
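The connection rule can be made concrete with a minimal sketch. The document contains no code, so Python is an assumption here, and the names `layer_of_each_neuron` and `feedforward_mask` are hypothetical. The sketch assumes the neurons are numbered layer by layer, starting with the input layer, and builds a Boolean matrix whose entry [i][j] says whether neuron i may send to neuron j.

```python
def layer_of_each_neuron(layer_sizes):
    """Number the neurons layer by layer and return the layer index of each one."""
    layers = []
    for layer, size in enumerate(layer_sizes):
        layers.extend([layer] * size)
    return layers


def feedforward_mask(layer_sizes, shortcuts=False):
    """Return M where M[i][j] is True if neuron i may connect to neuron j."""
    layer = layer_of_each_neuron(layer_sizes)
    n = len(layer)
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if shortcuts:
                # Shortcut connections may skip layers, but still only point forward.
                mask[i][j] = layer[j] > layer[i]
            else:
                # Plain feedforward: only to the immediately following layer.
                mask[i][j] = layer[j] == layer[i] + 1
    return mask


mask = feedforward_mask([2, 3, 1])
for row in mask:
    print([int(allowed) for allowed in row])
```

For layer sizes [2, 3, 1], every allowed entry lies above the diagonal, because each connection points to a later layer. Passing `shortcuts=True` additionally lets the two input neurons reach the output neuron directly.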

Recurrent networks have influence on themselves:- Recurrence is defined as the process of a neuron influencing itself by any means or by any connection. Recurrent networks do not always have explicitly defined input or output neurons.

Direct recurrences start and end at the same neuron:- Some networks allow neurons to be connected to themselves, which is called direct recurrence or sometimes self-recurrence. As a result, a neuron can strengthen (or, with a negative weight, inhibit) its own activation, which can drive it toward its activation limits.

Direct recurrence:- Now we expand the feedforward network by connecting a neuron $j$ to itself, with the weight of this connection denoted $w_{j,j}$. In other words, the diagonal of the weight matrix $W$ may be different from 0.
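To see what a self-connection does, the sketch below simulates a single neuron j that receives a one-off external input and afterwards only its own previous output, weighted by $w_{j,j}$. The tanh activation, the synchronous discrete time steps and the function name `run_self_recurrent_neuron` are assumptions made for illustration, not part of the original text.

```python
import math


def run_self_recurrent_neuron(w_jj, external_input, steps=20):
    """Simulate one neuron j whose only recurrent connection is to itself.

    The external input is applied at the first time step only; after that the
    neuron's net input is its own previous output weighted by w_jj.
    Returns the output after every time step.
    """
    output = 0.0
    history = []
    for t in range(steps):
        net = w_jj * output + (external_input if t == 0 else 0.0)
        output = math.tanh(net)  # tanh bounds the activation to (-1, 1)
        history.append(output)
    return history


strong = run_self_recurrent_neuron(w_jj=2.0, external_input=0.1)
weak = run_self_recurrent_neuron(w_jj=0.5, external_input=0.1)
print(strong[-1], weak[-1])
```

With $w_{j,j} = 2$ the neuron keeps amplifying its own output until it settles near the upper activation limit (at roughly 0.96 for tanh), while with $w_{j,j} = 0.5$ the same initial input dies away toward 0.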

Indirect recurrences can influence their starting neuron only by making detours:- If connections are allowed towards the input layer, they are called indirect recurrences. A neuron j can then use indirect forward connections to influence itself, for example by influencing the neurons of the next layer, which in turn influence j.

Indirect recurrence:- Again our network is based on a feedforward network, now with additional connections from neurons to their preceding layer allowed. Therefore, if the neurons are numbered layer by layer, the entries below the diagonal of W may be different from 0.
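The position of these connections in W can be checked with a small sketch. It assumes that neurons are numbered layer by layer starting at the input layer and that entry [i][j] stands for the connection from neuron i to neuron j; the helper names are hypothetical. A backward connection goes from a later layer to an earlier one, so the sender index i is larger than the receiver index j, which places the entry below the diagonal.

```python
def layer_of_each_neuron(layer_sizes):
    """Number the neurons layer by layer and return the layer index of each one."""
    layers = []
    for layer, size in enumerate(layer_sizes):
        layers.extend([layer] * size)
    return layers


def indirect_recurrent_mask(layer_sizes):
    """M[i][j] is True if neuron i may connect to neuron j: forward to the next
    layer, or back to the preceding layer (indirect recurrence)."""
    layer = layer_of_each_neuron(layer_sizes)
    n = len(layer)
    return [[abs(layer[j] - layer[i]) == 1 for j in range(n)] for i in range(n)]


def backward_connections(layer_sizes):
    """List the allowed (i, j) pairs that point from a later layer to an earlier one."""
    layer = layer_of_each_neuron(layer_sizes)
    mask = indirect_recurrent_mask(layer_sizes)
    n = len(layer)
    return [(i, j) for i in range(n) for j in range(n)
            if mask[i][j] and layer[j] < layer[i]]


pairs = backward_connections([2, 3, 1])
# Every backward connection has i > j, i.e. it lies below the diagonal of W.
print(all(i > j for i, j in pairs))
```

The forward connections of the same mask all stay above the diagonal, so the two kinds of connection never share a triangle of W under this numbering.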

Connections between neurons within one layer are called lateral recurrences. Each neuron often inhibits the other neurons of the layer and strengthens itself. As a result, only the strongest neuron becomes active (winner-takes-all scheme).

Lateral recurrence:- A laterally recurrent network permits connections within one layer.
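A winner-takes-all layer can be sketched with a MAXNET-style iteration. This is one common way to realize the lateral inhibition described above, not necessarily the scheme the text has in mind. Each neuron keeps its own activation (self-weight 1), is inhibited by every other neuron of the layer with weight $-\varepsilon$, and negative results are clipped to 0. The function name `winner_takes_all`, the default $\varepsilon = 1/(2n)$ and the stopping rule are assumptions.

```python
def winner_takes_all(activations, epsilon=None, max_steps=1000):
    """Iterate lateral inhibition within one layer until at most one neuron is active.

    Each neuron keeps its own activation (self-weight 1) and is inhibited by every
    other neuron of the layer with weight -epsilon; outputs are clipped at 0.
    """
    n = len(activations)
    if epsilon is None:
        epsilon = 1.0 / (2 * n)  # small enough that the strongest neuron stays positive
    a = [float(x) for x in activations]
    for _ in range(max_steps):
        if sum(1 for x in a if x > 0) <= 1:
            break
        total = sum(a)
        # Inhibition from all other neurons: total minus the neuron's own activation.
        a = [max(0.0, a_i - epsilon * (total - a_i)) for a_i in a]
    return a


result = winner_takes_all([0.5, 0.9, 0.3, 0.6])
winner = max(range(len(result)), key=lambda k: result[k])
print(winner, result)
```

Only the neuron that started strongest (index 1 here) keeps a positive activation. If two neurons start with exactly the same activation, they are inhibited equally and no single winner emerges, so the loop stops after `max_steps`.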

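Completely linked networks:- Completely linked networks permit connections between all neurons, except for direct recurrence. In other words, W may be different from 0 everywhere except along its diagonal.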
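The connection types of this section can be tied together in one sketch that sorts the nonzero entries of a weight matrix W. It assumes that W[i][j] is the weight from neuron i to neuron j and that each neuron carries a layer label (0 for the input layer); the labels are only used for sorting, and both they and the function names are hypothetical. Applied to a completely linked network, every category except direct recurrence appears.

```python
def classify_connections(weights, layer):
    """Sort the nonzero entries of W by connection type.

    weights[i][j] is the weight of the connection from neuron i to neuron j;
    layer[i] is the layer label of neuron i (0 = input layer).
    """
    kinds = {"feedforward": [], "shortcut": [], "direct": [],
             "lateral": [], "indirect": []}
    n = len(weights)
    for i in range(n):
        for j in range(n):
            if weights[i][j] == 0:
                continue  # no connection
            if i == j:
                kinds["direct"].append((i, j))       # neuron feeds itself
            elif layer[j] == layer[i]:
                kinds["lateral"].append((i, j))      # within one layer
            elif layer[j] == layer[i] + 1:
                kinds["feedforward"].append((i, j))  # to the next layer
            elif layer[j] > layer[i] + 1:
                kinds["shortcut"].append((i, j))     # skips forward over layers
            else:
                kinds["indirect"].append((i, j))     # back toward the input layer
    return kinds


def completely_linked_weights(n, value=1.0):
    """Every neuron connected to every other neuron; zero diagonal (no direct recurrence)."""
    return [[0.0 if i == j else value for j in range(n)] for i in range(n)]


layer = [0, 0, 1, 1, 2]
kinds = classify_connections(completely_linked_weights(len(layer)), layer)
print({name: len(pairs) for name, pairs in kinds.items()})
```

For these five labelled neurons the completely linked matrix yields 6 feedforward, 2 shortcut, 4 lateral and 8 indirect connections and no direct ones: 20 in total, which is every off-diagonal entry of W.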