SKEDSOFT

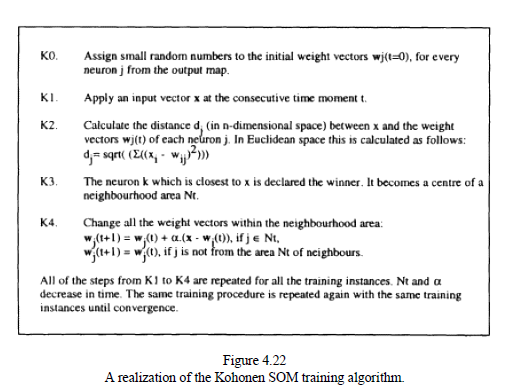

The unsupervised algorithm for training a SOM, proposed by Teuvo Kohonen, is outlined in figure 4.22.After each input pattern is presented, the winner is found and the connection weights in its neighborhood area Nt increase, while the connection weights outside the area are kept as they are. a is a learning parameter. It is recommended that the training time moments t (cycles) are more than 500 times the output neurons. If the training set contains fewer instances than this number, then the whole training set is fed again and again into the network.

SOMs learn statistical features. The synaptic weight vectors tend to approximate the density function of the input vectors in an orderly fashion (Kohonen 1990). Synaptic vectors wjconverge exponentially to centers of groups of patterns and the whole map represents to a certain degree the probability distribution of the input data. This property of the SOM is illustrated by a simple example of a two input, 20 x 20-output SOM. The input vectors are generated randomly with a uniform probability distribution function as points in a two-dimensional plane, having values in the interval [0,1].

Figure 4.23 represents graphically the change in weights after some learning cycles. The first box is a two-dimensional representation of the data. The other boxes represent SOM weights also in a two-dimensional space. The lines connect neighboring neurons, and the position of a neuron in the two-dimensional graph represents the value of its weight vector. Gradually the output neurons become self organized and represent a uniform distribution of input vectors in the input space. The time (in cycles) is given in each box as well. If the input data have a well-defined probability density function, then theweight vectors of the output neurons try to imitate it, regardless of how complex it is.

The weight vectors are also called reference vectors or reference codebook vectors, and the whole weightvector space is called a reference codebook. The adaptation of the same network to the generated input vectors in 2 when the latter are not uniformly distributed but have a Gaussian distribution of a star type.

The SOM is very similar to an elastic net which covers the input vector's space.