SKEDSOFT

Algorithm to Find a Maximally-Specific Hypothesis: Algorithm to search the space of conjunctions:

- Start with the most specific hypothesis

- Generalize the hypothesis when it fails to cover a positive example

Algorithm:

- Initialize h to the most specific hypothesis

- For each positive training example X

For each value a in h

If example X and h agree on a, do nothing

Else generalize a by the next more general constraint

3. Output hypothesis h

Example: Let’s run the learning algorithm above with the following examples:

((red,small,round,humid,low,smooth), poisonous)

((red,small,elongated,humid,low,smooth), poisonous)

((gray,large,elongated,humid,low,rough), not-poisonous)

((red,small,elongated,humid,high,rough), poisonous)

We start with the most specific hypothesis: h = (0,0,0,0,0,0)

The first example comes and since the example is positive and h fails to cover it, we simply generalize h to cover exactly this example:

h = (red,small,round,humid,low,smooth)

Hypothesis h basically says that the first example is the only positive example, all other examples are negative.

Then comes examples 2: ((red,small,elongated,humid,low,smooth), poisonous)

This example is positive. All attributes match hypothesis h except for attribute shape: it has the value elongated, not round. We generalize this attribute using symbol ? yielding:

h: (red,small,?,humid,low,smooth)

The third example is negative and so we just ignore it.

Why is it we don’t need to be concerned with negative examples?

Upon observing the 4th example, hypothesis h is generalized to the following:

h = (red,small,?,humid,?,?)

h is interpreted as any mushroom that is red, small and found on humid land should be classified as poisonous.

The algorithm is guaranteed to find the hypothesis that is most specific and consistent with the set of training examples. It takes advantage of the general-specific ordering to move on the corresponding lattice searching for the next most specific hypothesis.

Note that:

There are many hypotheses consistent with the training data D. Why should we prefer the most specific hypothesis?

What would happen if the examples are not consistent? What would happen if they have errors, noise?

What if there is a hypothesis space H where one can find more that one maximally specific hypothesis h? The search over the lattice must then be different to allow for this possibility.

- The algorithm that finds the maximally specific hypothesis is limited in that it only finds one of many hypotheses consistent with the training data.

- The Candidate Elimination Algorithm (CEA) finds ALL hypotheses consistent with the training data.

- CEA does that without explicitly enumerating all consistent hypotheses.

Applications:

- Chemical Mass Spectroscopy

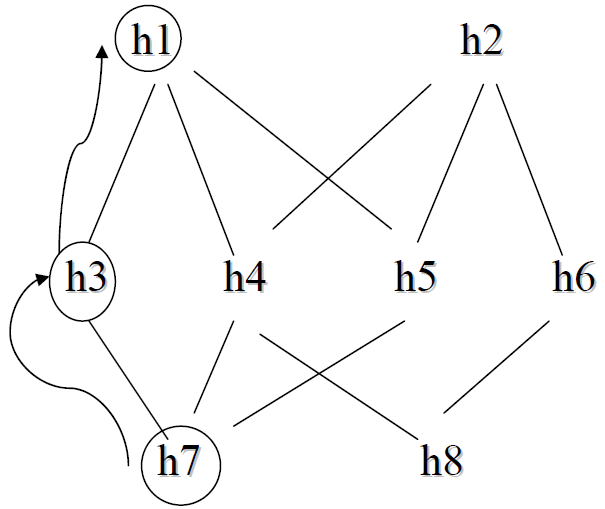

- Control Rules for Heuristic Search