SKEDSOFT

N queens : Goal: Put n chess-game queens on an n x n board, with no two queens on the same row, column, or diagonal.

Example:

Chess board reconfigurations: Here, goal state is initially unknown but is specified by constraints that it must satisfy Hill climbing (or gradient ascent/descent)

Iteratively maximize “value” of current state, by replacing it by successor state that has highest value, as long as possible.

Note: minimizing a “value” function v(n) is equivalent to maximizing –v(n), thus both notions are used interchangeably.

Hill climbing – example: Complete state formulation for 8 queens

Successor function: move a single queen to another square in the same column

Cost: number of pairs that are attacking each other.

Minimization problem

Hill climbing (or gradient ascent/descent)

• Iteratively maximize “value” of current state, by replacing it by successor state that has highest value, as long as possible.

Note: minimizing a “value” function v(n) is equivalent to maximizing –v(n), thus both notions are used interchangeably.

• Algorithm:

- determine successors of current state

- choose successor of maximum goodness (break ties randomly)

- if goodness of best successor is less than current state's goodness, stop

- otherwise make best successor the current state and go to step 1

• No search tree is maintained, only the current state.

• Like greedy search, but only states directly reachable from the current state are considered.

• Problems:

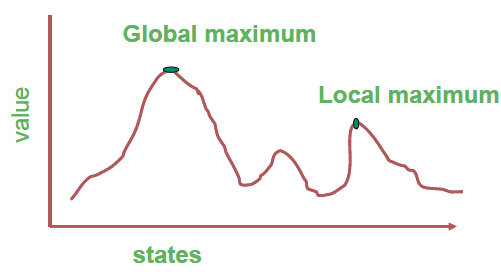

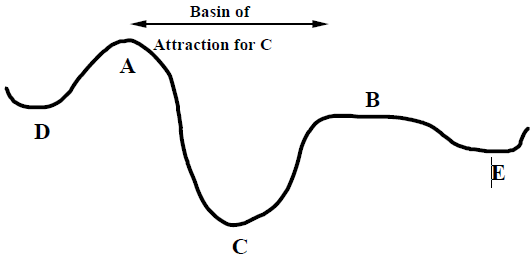

Local maxima: Once the top of a hill is reached the algorithm will halt since every possible step leads down.

Plateaux: If the landscape is flat, meaning many states have the same goodness, algorithm degenerates to a random walk.

Ridges: If the landscape contains ridges, local improvements may follow a zigzag path up the ridge, slowing down the search.

- Shape of state space landscape strongly influences the success of the search process. A very spiky surface which is flat in between the spikes will be very difficult to solve.

- Can be combined with nondeterministic search to recover from local maxima.

- Random-restart hill-climbing is a variant in which reaching a local maximum causes the current state to be saved and the search restarted from a random point. After several restarts, return the best state found. With enough restarts, this method will find the optimal solution.

- Gradient descent is an inverted version of hill-climbing in which better states are represented by lower cost values. Local minima cause problems instead of local maxima.

Hill climbing - example

• Complete state formulation for 8 queens

– Successor function: move a single queen to another square in the same column

– Cost: number of pairs that are attacking each other.

• Minimization problem

• Problem: depending on initial state, may get stuck in local extremum.

Minimizing energy

• Compare our state space to that of a physical system that is subject to natural interactions

• Compare our value function to the overall potential energy E of the system.

• On every updating, we have DE ≤ 0

Hence the dynamics of the system tend to move E toward a minimum. We stress that there may be different such states — they are local minima. Global minimization is not guaranteed.