SKEDSOFT

Introduction: This section discusses interval-scaled variables and their standardization. It then describes distance measures that are commonly used for computing the dissimilarity of objects described by such variables. These measures include the Euclidean, Manhattan, and Minkowski distances.

Interval-scaled variables are continuous measurements on a roughly linear scale. Typical examples include weight and height, latitude and longitude coordinates (e.g., when clustering houses), and weather temperature.

The measurement unit used can affect the clustering analysis. For example, changing measurement units from meters to inches for height, or from kilograms to pounds for weight, may lead to a very different clustering structure. In general, expressing a variable in smaller units will lead to a larger range for that variable, and thus a larger effect on the resulting clustering structure. To help avoid dependence on the choice of measurement units, the data should be standardized. Standardizing measurements attempts to give all variables an equal weight. This is particularly useful when given no prior knowledge of the data. However, in some applications, users may intentionally want to give more weight to a certain set of variables than to others. For example, when clustering basketball player candidates, we may prefer to give more weight to the variable height.
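The effect of the measurement unit can be seen in a small sketch. The three people below and their values are hypothetical, chosen only for illustration, and `nearest_to_a` is a helper name introduced here. Expressing height in centimeters instead of meters enlarges its range and changes which person is closest to A.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_to_a(people):
    """Return the key of the person closest to person "A"."""
    others = (k for k in people if k != "A")
    return min(others, key=lambda k: euclidean(people["A"], people[k]))

# Hypothetical data: (height in meters, weight in kilograms).
people_m = {"A": (1.60, 60.0), "B": (1.90, 61.0), "C": (1.62, 64.0)}

# The same people with height expressed in centimeters.
people_cm = {k: (h * 100, w) for k, (h, w) in people_m.items()}

print(nearest_to_a(people_m))   # weight dominates when height is in meters
print(nearest_to_a(people_cm))  # height dominates when it is in centimeters
```

With height in meters, the 30 cm gap between A and B contributes only 0.3 to the distance, so B appears closest. In centimeters the same gap contributes 30, and C becomes the nearest neighbor.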

To standardize measurements, one choice is to convert the original measurements to unitless variables. Given measurements for a variable $f$, this can be performed as follows.

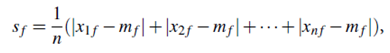

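Calculate the mean absolute deviation, $s_f$:

$$s_f = \frac{1}{n}\left(|x_{1f} - m_f| + |x_{2f} - m_f| + \cdots + |x_{nf} - m_f|\right)$$

where $x_{1f}, \ldots, x_{nf}$ are $n$ measurements of $f$, and $m_f$ is the mean value of $f$, that is, $m_f = \frac{1}{n}\left(x_{1f} + x_{2f} + \cdots + x_{nf}\right)$.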

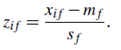

Calculate the standardized measurement, or z-score:

$$z_{if} = \frac{x_{if} - m_f}{s_f}$$

The mean absolute deviation, $s_f$, is more robust to outliers than the standard deviation, $\sigma_f$. When computing the mean absolute deviation, the deviations from the mean (i.e., $|x_{if} - m_f|$) are not squared; hence, the effect of outliers is somewhat reduced. There are more robust measures of dispersion, such as the median absolute deviation. However, the advantage of using the mean absolute deviation is that the z-scores of outliers do not become too small; hence, the outliers remain detectable. Standardization may or may not be useful in a particular application. Thus, the choice of whether and how to perform standardization should be left to the user.
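The standardization procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the sample heights are assumptions made for this example.

```python
def mean_absolute_deviation(values):
    """Mean absolute deviation s_f of one variable's measurements."""
    n = len(values)
    m = sum(values) / n                      # mean value m_f
    return sum(abs(x - m) for x in values) / n

def z_scores(values):
    """Standardized measurements z_if = (x_if - m_f) / s_f."""
    m = sum(values) / len(values)
    s = mean_absolute_deviation(values)
    if s == 0:
        raise ValueError("all measurements are equal; z-scores are undefined")
    return [(x - m) / s for x in values]

# Hypothetical heights, first in centimeters, then converted to inches.
heights_cm = [150.0, 160.0, 170.0, 180.0, 190.0]
heights_in = [h / 2.54 for h in heights_cm]

print(mean_absolute_deviation(heights_cm))
print(z_scores(heights_cm))
print(z_scores(heights_in))
```

Up to floating-point rounding, the z-scores are the same for both unit choices, which is the point of standardizing: the result no longer depends on the measurement unit.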

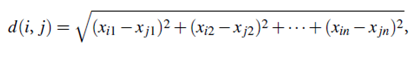

After standardization, or without standardization in certain applications, the dissimilarity (or similarity) between the objects described by interval-scaled variables is typically computed based on the distance between each pair of objects. The most popular distance measure is Euclidean distance, which is defined as

$$d(i, j) = \sqrt{(x_{i1} - x_{j1})^2 + (x_{i2} - x_{j2})^2 + \cdots + (x_{in} - x_{jn})^2}$$

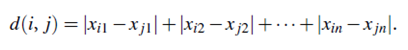

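where $i = (x_{i1}, x_{i2}, \ldots, x_{in})$ and $j = (x_{j1}, x_{j2}, \ldots, x_{jn})$ are two $n$-dimensional data objects. Another well-known metric is Manhattan (or city block) distance, defined as

$$d(i, j) = |x_{i1} - x_{j1}| + |x_{i2} - x_{j2}| + \cdots + |x_{in} - x_{jn}|$$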

Both the Euclidean distance and Manhattan distance satisfy the following mathematical requirements of a distance function:

1. d(i, j) ≥ 0: Distance is a nonnegative number.

2. d(i, i) = 0: The distance of an object to itself is 0.

3. d(i, j) = d( j, i): Distance is a symmetric function.

4. d(i, j) ≤ d(i, h) + d(h, j): Going directly from object i to object j in space is no more than making a detour over any other object h (triangle inequality).
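As a rough illustration, the two distance measures and the four requirements can be checked numerically on a handful of points. The sample points and the helper `satisfies_metric_properties` are assumptions introduced for this sketch. A numerical check on a few objects is only a sanity test, not a proof.

```python
import itertools
import math

def euclidean(i, j):
    """Euclidean distance between two n-dimensional objects."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(i, j)))

def manhattan(i, j):
    """Manhattan (city block) distance between two n-dimensional objects."""
    return sum(abs(a - b) for a, b in zip(i, j))

def satisfies_metric_properties(d, objects, tol=1e-12):
    """Check the four requirements for every triple (i, j, h) of objects."""
    for i, j, h in itertools.product(objects, repeat=3):
        if d(i, j) < 0:                          # 1. nonnegativity
            return False
        if d(i, i) != 0:                         # 2. distance to itself is 0
            return False
        if abs(d(i, j) - d(j, i)) > tol:         # 3. symmetry
            return False
        if d(i, j) > d(i, h) + d(h, j) + tol:    # 4. triangle inequality
            return False
    return True

# Hypothetical two-dimensional objects.
points = [(1.0, 2.0), (3.0, 5.0), (0.0, -1.0), (4.0, 4.0)]

print(euclidean(points[0], points[1]))   # sqrt(2^2 + 3^2) = sqrt(13)
print(manhattan(points[0], points[1]))   # 2 + 3 = 5
print(satisfies_metric_properties(euclidean, points))
print(satisfies_metric_properties(manhattan, points))
```

The small tolerance `tol` guards the symmetry and triangle inequality checks against floating-point rounding.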