SKEDSOFT

Introduction:- The manner in which the neurons of a neural network are structured is intimately linked with the learning algorithm used to train the network. We may therefore speak of learning algorithms (rules) used in the design of neural networks as being structured.

In general, we may identify three fundamentally different classes of network architectures:-

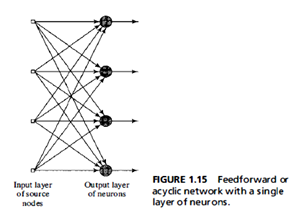

1. Single-Layer Feedforward Networks:- In a layered neural network, the neurons are organized in the form of layers. In the simplest form of a layered network, we have an input layer of source nodes that projects onto an output layer of neurons (computation nodes), but not vice versa. In other words, this network is strictly of a feedforward or acyclic type. The case illustrated has four nodes in both the input and output layers. Such a network is called a single-layer network, with the designation "single-layer" referring to the output layer of computation nodes; the input layer of source nodes is not counted because no computation is performed there.
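The single-layer structure described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function name `single_layer_forward`, the `tanh` activation, the bias terms, and all numeric values are assumptions chosen for the example and do not come from the text.

```python
import math

def single_layer_forward(x, weights, biases):
    """Propagate source-node values x to the output layer of neurons.

    weights[j][i] is the (hypothetical) weight from source node i to
    output neuron j. The source nodes perform no computation; only the
    output neurons do, which is why the network counts as single-layer.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        # Weighted sum of the inputs plus a bias, then a tanh activation
        # (the activation choice is an assumption for this sketch).
        v = sum(w * xi for w, xi in zip(w_row, x)) + b
        outputs.append(math.tanh(v))
    return outputs

# Four source nodes projecting onto four output neurons.
x = [1.0, 0.0, -1.0, 0.5]
weights = [
    [0.5, -0.2, 0.1, 0.0],
    [0.0, 0.3, -0.4, 0.2],
    [-0.1, 0.0, 0.2, 0.6],
    [0.3, 0.3, 0.3, 0.3],
]
biases = [0.1, 0.0, -0.1, 0.0]

y = single_layer_forward(x, weights, biases)
```

Signals flow only from `x` to `y`; nothing in the function feeds an output back toward the input, which is what makes the network acyclic.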

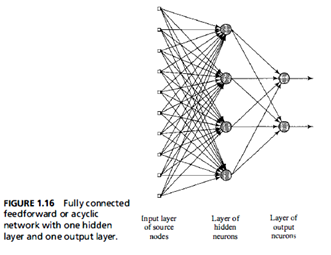

2. Multilayer Feedforward Networks:- The second class of feedforward neural network distinguishes itself by the presence of one or more hidden layers, whose computation nodes are correspondingly called hidden neurons or hidden units. The function of hidden neurons is to intervene between the external input and the network output in some useful manner. By adding one or more hidden layers, the network is enabled to extract higher-order statistics. The ability of hidden neurons to extract higher-order statistics is particularly valuable when the size of the input layer is large.

The source nodes in the input layer of the network supply respective elements of the activation pattern, which constitute the input signals applied to the neurons in the second layer (i.e., the first hidden layer). The output signals of the second layer are used as inputs to the third layer, and so on for the rest of the network. Typically the neurons in each layer of the network have as their inputs the output signals of the preceding layer only. The set of output signals of the neurons in the output (final) layer of the network constitutes the overall response of the network to the activation pattern supplied by the source nodes in the input (first) layer.
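The layer-by-layer flow of signals described above can be sketched as a loop in which each layer receives only the outputs of the preceding layer. This is a minimal sketch under stated assumptions: the names `layer_forward` and `mlp_forward`, the `tanh` activation, the bias terms, the 3-2-1 layer sizes, and the weights are all hypothetical.

```python
import math

def layer_forward(inputs, weights, biases):
    """Compute the output signals of one layer of neurons."""
    return [
        math.tanh(sum(w * s for w, s in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def mlp_forward(x, layers):
    """Pass the activation pattern x through every layer in turn.

    layers is a list of (weights, biases) pairs, ordered from the first
    hidden layer to the output layer.
    """
    signal = x  # the source nodes only supply the activation pattern
    for weights, biases in layers:
        # Each layer sees only the output signals of the preceding layer.
        signal = layer_forward(signal, weights, biases)
    return signal  # overall response of the network

# Hypothetical network: 3 source nodes, 2 hidden neurons, 1 output neuron.
x = [0.5, -1.0, 0.25]
layers = [
    ([[0.2, -0.1, 0.4], [0.7, 0.3, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[0.6, -0.8]], [0.05]),                             # output layer
]

response = mlp_forward(x, layers)
```

Adding another hidden layer only means appending another `(weights, biases)` pair to `layers`; the loop itself does not change.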

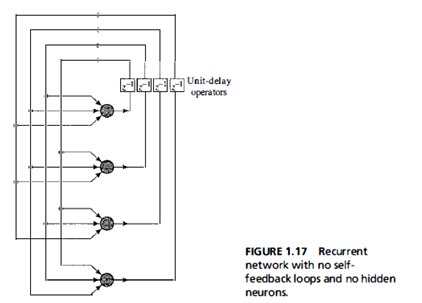

3. Recurrent Networks:- A recurrent neural network distinguishes itself from a feedforward neural network in that it has at least one feedback loop. For example, a recurrent network may consist of a single layer of neurons with each neuron feeding its output signal back to the inputs of all the other neurons. In the structure depicted in this figure there are no self-feedback loops in the network; self-feedback refers to a situation where the output of a neuron is fed back into its own input.

The presence of feedback loops, whether in the recurrent structure of Fig. 1.17 or that of Fig. 1.18, has a profound impact on the learning capability of the network and on its performance. Moreover, the feedback loops involve the use of particular branches composed of unit-delay elements (denoted by $z^{-1}$), which result in nonlinear dynamical behavior, assuming that the neural network contains nonlinear units.
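A single-layer recurrent network without self-feedback can be sketched as a discrete-time update in which the unit delay means that the outputs at step n become the inputs at step n + 1. This is only an illustration under stated assumptions: the names `recurrent_step` and `run_recurrent`, the `tanh` nonlinearity, the three-neuron size, the weights, and the initial state are hypothetical.

```python
import math

def recurrent_step(y_prev, weights):
    """Advance the network by one time step.

    y_prev holds the delayed outputs y(n); the return value is y(n + 1).
    weights[j][i] is the (hypothetical) weight on the feedback branch
    from neuron i to neuron j.
    """
    n = len(y_prev)
    y_next = []
    for j in range(n):
        # Neuron j receives the delayed outputs of all the other neurons;
        # skipping i == j means there is no self-feedback loop.
        v = sum(weights[j][i] * y_prev[i] for i in range(n) if i != j)
        y_next.append(math.tanh(v))
    return y_next

def run_recurrent(y0, weights, steps):
    """Return the list of states [y(0), y(1), ..., y(steps)]."""
    trajectory = [list(y0)]
    for _ in range(steps):
        trajectory.append(recurrent_step(trajectory[-1], weights))
    return trajectory

# Three neurons, each feeding its output back to the other two.
weights = [
    [0.0, 0.8, -0.5],
    [0.6, 0.0, 0.4],
    [-0.7, 0.9, 0.0],
]
y0 = [0.1, -0.2, 0.3]

trajectory = run_recurrent(y0, weights, steps=5)
```

Because each state depends on the previous state through a nonlinear function, the network has dynamics of its own, unlike the feedforward cases, where the response is a fixed function of the current input.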