SKEDSOFT

Introduction

Generally, recurrent networks are networks that can influence themselves by means of recurrences, e.g. by feeding the network output back into the following computation steps. There are many types of recurrent networks of nearly arbitrary form, and nearly all of them are referred to as recurrent neural networks.

A recurrent multilayer perceptron can compute more than an ordinary MLP: if its recurrent weights are set to 0, it reduces to an ordinary MLP, so every ordinary MLP is a special case of a recurrent one.
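The claim that a recurrent MLP contains the ordinary MLP as a special case can be checked numerically. The following Python sketch assumes one particular form of recurrence (the hidden layer feeds its own previous activation back to itself through weights W_rec), a tanh hidden layer and a linear output layer; the function names are hypothetical and chosen for illustration only. With W_rec set to zero, every time step produces the same output as the plain MLP.

```python
import math
import random

def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def mlp_forward(x, W_in, W_out):
    """Ordinary MLP: one tanh hidden layer, linear output layer."""
    hidden = [math.tanh(z) for z in matvec(W_in, x)]
    return matvec(W_out, hidden)

def recurrent_mlp_forward(xs, W_in, W_rec, W_out):
    """Recurrent MLP over a sequence xs.

    Assumed (hypothetical) form of recurrence: the hidden layer also receives
    its own previous activation through the recurrent weights W_rec.
    """
    hidden = [0.0] * len(W_in)  # no previous activation before the first step
    outputs = []
    for x in xs:
        net = [a + b for a, b in zip(matvec(W_in, x), matvec(W_rec, hidden))]
        hidden = [math.tanh(z) for z in net]
        outputs.append(matvec(W_out, hidden))
    return outputs

rng = random.Random(0)

def rand_matrix(rows, cols):
    """Random weights in [-1, 1] for illustration."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# 3 inputs, 4 hidden neurons, 2 outputs, a sequence of 5 input vectors.
W_in, W_out = rand_matrix(4, 3), rand_matrix(2, 4)
W_rec_zero = [[0.0] * 4 for _ in range(4)]
xs = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(5)]

rec = recurrent_mlp_forward(xs, W_in, W_rec_zero, W_out)
ff = [mlp_forward(x, W_in, W_out) for x in xs]
same = all(math.isclose(a, b) for r, f in zip(rec, ff) for a, b in zip(r, f))
print(same)  # True: with zero recurrent weights each step is just the MLP
```

With nonzero recurrent weights the first step still matches the MLP (there is no previous activation yet), but later steps depend on the history of the sequence, which an ordinary MLP cannot express.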

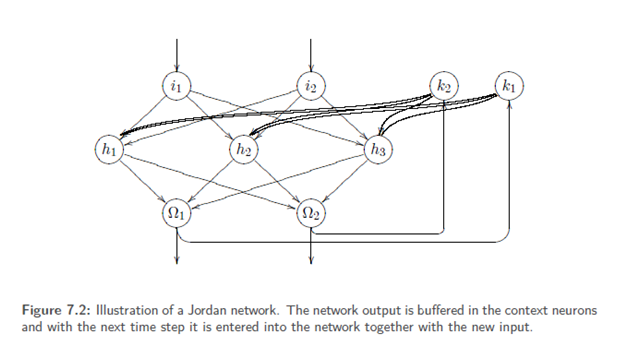

Jordan networks

A Jordan network is a multilayer perceptron with a set K of so-called context neurons k1, k2, ..., k|K|. There is one context neuron per output neuron. A context neuron simply memorizes an output until it can be processed in the next time step. Therefore, each output neuron has a weighted connection to exactly one context neuron. The stored values are fed back into the network through full connections from the context neurons to the input layer.
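A minimal sketch of the Jordan network forward pass described above, assuming that the input layer simply passes its values through, so that the full connections from the context neurons to the input layer are modeled by appending the context values to the external input vector before the first weighted layer. The tanh hidden layer, the linear output layer and the names jordan_forward and w_ctx (the weights of the output-to-context connections) are hypothetical choices for illustration.

```python
import math

def matvec(W, v):
    """Multiply a matrix W (list of rows) by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def jordan_forward(xs, W_hidden, W_out, w_ctx):
    """Run a Jordan network over an input sequence xs.

    W_hidden maps [external input + context values] to the hidden layer (tanh),
    W_out maps the hidden layer to the output layer (linear), and w_ctx[j] is
    the (hypothetical) weight from output neuron j to its context neuron k_j.
    """
    n_out = len(W_out)
    context = [0.0] * n_out           # one context neuron per output neuron
    outputs = []
    for x in xs:
        # Assumption: context neurons are fully linked toward the input layer,
        # modeled here as extra input values next to the external input.
        net_in = list(x) + context
        hidden = [math.tanh(z) for z in matvec(W_hidden, net_in)]
        y = matvec(W_out, hidden)
        outputs.append(y)
        # Each context neuron memorizes its output neuron's value so that it
        # can be processed in the next time step.
        context = [w * yj for w, yj in zip(w_ctx, y)]
    return outputs

# One external input, one hidden neuron, one output (and so one context neuron).
outputs = jordan_forward([[0.5], [0.5]], W_hidden=[[1.0, 1.0]], W_out=[[1.0]], w_ctx=[1.0])
print(outputs)
```

Because both time steps receive the same external input 0.5, any difference between the two outputs comes from the context neuron, which carries the first output into the second step.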

In the original definition of a Jordan network, the context neurons are also recurrent to themselves via a connecting weight. However, most applications omit this self-recurrence, since the Jordan network is already very dynamic and difficult to analyze even without it.
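The self-recurrence from the original definition can be sketched as a single context neuron whose new value is its own previous value, weighted by a self-recurrent weight (called lam here, a hypothetical name), plus the current output of its output neuron; the output-to-context weight is assumed to be 1. With lam = 0 this is the common simplification in which the context neuron only remembers the last output. The value stored at time t is the one fed back into the network at time t + 1.

```python
def context_trace(outputs, lam):
    """Values held by one context neuron with a self-recurrent weight lam.

    At each step the neuron adds the current output of its output neuron to
    lam times its own previous value: k <- lam * k + y. The output-to-context
    weight is assumed to be 1 (illustrative assumption).
    """
    k = 0.0
    trace = []
    for y in outputs:
        k = lam * k + y
        trace.append(k)
    return trace

print(context_trace([1.0, 1.0, 1.0], lam=0.0))  # [1.0, 1.0, 1.0]: only the last output
print(context_trace([1.0, 1.0, 1.0], lam=0.5))  # [1.0, 1.5, 1.75]: decaying sum of past outputs
```

With 0 < lam < 1 the context neuron holds an exponentially decaying sum of all past outputs instead of only the most recent one.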

A context neuron k receives the output value of another neuron i at time t and then reenters it into the network at time t + 1. In summary, a Jordan network is a multilayer perceptron with one context neuron per output neuron. The set of context neurons is called K, and the context neurons are completely linked toward the input layer of the network.