SKEDSOFT

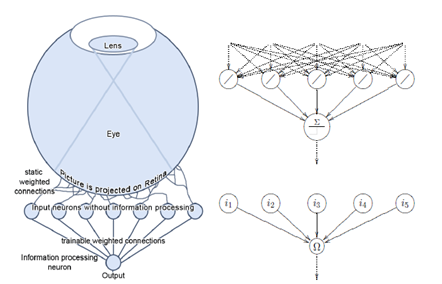

Introduction:- The term perceptron is most often used to describe a feedforward network with shortcut connections. This network has a layer of scanner neurons (the retina) with statically weighted connections to the following layer; the weights of the other layers are allowed to be changed.

All neurons subordinate to the retina are pattern detectors. Here we initially use a binary perceptron with every output neuron having exactly two possible output values (e.g. {0, 1} or {−1, 1}). Thus, a binary threshold function is used as activation function, depending on the threshold value of the output neuron. In a way, the binary activation function represents a query which can also be negated by means of negative weights. The perceptron can thus be used to accomplish true logical information processing, but it is not the easiest way to achieve Boolean logic.
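As a minimal sketch of the idea above, the following Python snippet models a single binary threshold neuron with output values {0, 1} and uses it to answer simple Boolean queries. The function names, the weights, the threshold values, and the choice of "greater than or equal" as the firing condition are all assumptions made for illustration; they are not taken from the text.

```python
def binary_threshold(net_input, threshold):
    """Return 1 if the net input reaches the threshold, otherwise 0.

    Assumption: the neuron fires when net_input >= threshold.
    """
    return 1 if net_input >= threshold else 0


def binary_neuron(inputs, weights, threshold):
    """Weighted sum of the inputs followed by the binary threshold function."""
    net_input = sum(x * w for x, w in zip(inputs, weights))
    return binary_threshold(net_input, threshold)


def logical_and(a, b):
    # Both inputs must be active to reach the (assumed) threshold of 1.5.
    return binary_neuron([a, b], [1.0, 1.0], threshold=1.5)


def logical_or(a, b):
    # One active input is enough to reach the (assumed) threshold of 0.5.
    return binary_neuron([a, b], [1.0, 1.0], threshold=0.5)


def logical_not(a):
    # A negative weight negates the query: an active input pushes the
    # net input below the (assumed) threshold of -0.5.
    return binary_neuron([a], [-1.0], threshold=-0.5)
```

The `logical_not` case shows what "negated by means of negative weights" can look like in practice: the same threshold mechanism is used, only the sign of the weight changes.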

An input neuron is an identity neuron. It forwards exactly the information it receives. Thus, it represents the identity function, which should be indicated by the symbol /. Therefore the input neuron is represented by the symbol:

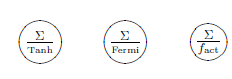

Information processing neurons process the input information in some way, i.e. they do not represent the identity function. A binary neuron sums up all inputs by using the weighted sum as propagation function, which we illustrate by the sign Σ. The activation function of the neuron is then the binary threshold function, which is illustrated by a symbol of its own. Together, the two symbols give the complete depiction of an information processing neuron. Other neurons that use the weighted sum as propagation function but the hyperbolic tangent or the Fermi function as activation function, or a separately defined activation function f_act, are represented similarly.

These neurons are also referred to as Fermi neurons or Tanh neurons.
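The following sketch illustrates how such neurons differ only in their activation function while sharing the weighted sum as propagation function. It assumes the Fermi function in its common logistic form 1 / (1 + e^(−x)), without any additional parameter; the helper names `weighted_sum`, `fermi`, and `neuron_output` are hypothetical and chosen for this example only.

```python
import math


def weighted_sum(inputs, weights):
    """Propagation function: sum of each input multiplied by its weight."""
    return sum(x * w for x, w in zip(inputs, weights))


def fermi(net_input):
    """Fermi (logistic) function, assumed here as 1 / (1 + exp(-x)).

    Maps any real net input to a value strictly between 0 and 1.
    """
    return 1.0 / (1.0 + math.exp(-net_input))


def neuron_output(inputs, weights, activation):
    """Apply the given activation function to the weighted sum of the inputs."""
    return activation(weighted_sum(inputs, weights))


# A Fermi neuron and a Tanh neuron fed with the same inputs and weights.
example_inputs = [1.0, 2.0]
example_weights = [0.5, -0.25]  # illustrative values; the weighted sum is 0.0
fermi_result = neuron_output(example_inputs, example_weights, fermi)
tanh_result = neuron_output(example_inputs, example_weights, math.tanh)
```

Passing the activation function as an argument mirrors the text: the propagation step stays the same, and only the activation is exchanged.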

The perceptron is a feedforward network containing a retina that is used only for data acquisition and which has fixed-weighted connections with the first neuron layer (input layer). The fixed-weight layer is followed by at least one trainable weight layer. One neuron layer is completely linked with the following layer. The first layer of the perceptron consists of the input neurons. As a matter of fact, the first neuron layer is often understood as the input layer, because this layer only forwards the input values. The retina itself and the static weights behind it are no longer mentioned or displayed, since they do not process information in any case. So, the depiction of a perceptron starts with the input neurons.
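The structure described above can be sketched as a forward pass through a perceptron with one trainable weight layer. This is an illustration under stated assumptions, not a definitive implementation: the retina is omitted (as in the depiction), the output neurons are binary with values {0, 1}, a neuron fires when its net input is greater than or equal to its threshold, and `weights[j][i]` is a hypothetical layout holding the weight from input neuron `i` to output neuron `j`.

```python
def identity(value):
    """Input neurons only forward the value they receive."""
    return value


def perceptron_forward(pattern, weights, thresholds):
    """Compute the outputs of a perceptron with one trainable weight layer.

    pattern    -- list of input values, one per input neuron
    weights    -- weights[j][i] is the weight from input i to output j
                  (assumed layout; every input is linked to every output)
    thresholds -- one threshold value per output neuron
    """
    # Input layer: identity neurons pass the pattern on unchanged.
    input_outputs = [identity(x) for x in pattern]

    outputs = []
    for neuron_weights, threshold in zip(weights, thresholds):
        # Propagation function: weighted sum over all input neurons.
        net_input = sum(o * w for o, w in zip(input_outputs, neuron_weights))
        # Activation function: binary threshold (assumed to fire on >=).
        outputs.append(1 if net_input >= threshold else 0)
    return outputs


# Two output neurons sharing two inputs; the weights and thresholds are
# illustrative and make the first output behave like AND, the second like OR.
example_weights = [[1.0, 1.0], [1.0, 1.0]]
example_thresholds = [1.5, 0.5]
result = perceptron_forward([1, 0], example_weights, example_thresholds)
```

Because each output neuron has its own row of weights and its own threshold, changing those values is all that is needed to change what the layer computes, which is what makes this layer the trainable one.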