SKEDSOFT

The REFuNN algorithm, first published in Kasabov (1993b) and further refined in Kasabov (1995c), is a simple connectionist method for extracting both weighted fuzzy rules and simple fuzzy rules.

The method is based on training a multilayer perceptron (MLP) architecture with fuzzified data. The REFuNN algorithm, outlined below, rests on the following principles:

1. Simple operations are used and a low computational cost is achieved.

2. Hidden nodes in an MLP can learn features, rules, and groups in the training data.

3. Fuzzy quantization of the input and output variables is done in advance; the granularity of the fuzzy representation (the number of fuzzy labels used) ultimately determines the "fineness" and quality of the extracted rules. Standard, uniformly distributed triangular membership functions can be used for both the fuzzy input and the fuzzy output labels.

4. Automatically extracted rules may need additional manipulation depending on the reasoning method applied afterward.
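Principle 3 can be illustrated with a minimal Python sketch of uniform triangular fuzzification. The function names and the three-label example (Low, Medium, High on [0, 1]) are hypothetical; the sketch assumes n equally spaced triangle centres, with each triangle falling to zero at its neighbours' centres, so the membership degrees of any crisp value sum to 1.

```python
def triangle_centres(lo, hi, n):
    """Centres of n uniformly distributed triangular labels on [lo, hi] (n >= 2)."""
    if n < 2:
        raise ValueError("need at least two fuzzy labels")
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def fuzzify(x, lo, hi, n):
    """Membership degrees of crisp value x in each of the n triangular labels."""
    centres = triangle_centres(lo, hi, n)
    width = centres[1] - centres[0]      # each triangle spans one step either side
    x = min(max(x, lo), hi)              # clip to the universe of discourse
    return [max(0.0, 1.0 - abs(x - c) / width) for c in centres]


# Hypothetical example: one input variable quantized into Low, Medium, High on [0, 1]
print(fuzzify(0.25, 0.0, 1.0, 3))  # [0.5, 0.5, 0.0]
```

A larger n gives a finer granularity, and therefore finer-grained rules, at the cost of more condition-element nodes in the network.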

The REFuNN Algorithm

Step 1. Initialization of a FuNN. This FuNN is part of the fuzzy neural network architecture shown in figure 4.38. The functional parameters of the rule layer and of the output fuzzy predicates layer can be set as follows: a summation input function, a sigmoid activation function, and a direct output function.

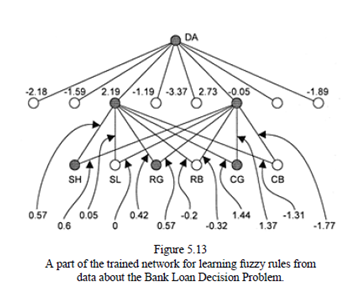
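The node functions chosen in Step 1 can be sketched in Python as follows. This is a minimal sketch assuming dense weight lists and one bias per node; `layer_forward` and `funn_rule_to_output` are hypothetical names, and the FuNN's condition-element (fuzzification) layer is not modelled, so the inputs are assumed to be already-fuzzified membership degrees.

```python
import math


def sigmoid(net):
    """Sigmoid activation used in the rule and output fuzzy-predicate layers."""
    return 1.0 / (1.0 + math.exp(-net))


def layer_forward(inputs, weights, biases):
    """Summation input function -> sigmoid activation -> direct output."""
    return [sigmoid(b + sum(w * x for w, x in zip(row, inputs)))
            for row, b in zip(weights, biases)]


def funn_rule_to_output(fuzzy_inputs, w_rule, b_rule, w_out, b_out):
    """Propagate membership degrees through the rule layer, then the output layer."""
    rule_act = layer_forward(fuzzy_inputs, w_rule, b_rule)
    return rule_act, layer_forward(rule_act, w_out, b_out)


# Hypothetical weights: two membership inputs, two rule nodes, one output label
rules, outputs = funn_rule_to_output([1.0, 0.0], [[2.0, -1.0], [-1.0, 2.0]],
                                     [0.0, 0.0], [[1.5, -1.5]], [0.0])
print(rules, outputs)
```

Because the output function is direct, each node's output is simply its sigmoid activation, so every rule and output activation lies in (0, 1).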

Step 2. Training the FuNN. A supervised training algorithm, for example backpropagation, is applied to train the network on the fuzzified data until convergence. Figure 5.13 shows part of a FuNN trained on data generated for the Bank Loan case example. Stepwise training and zeroing can also be applied.
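Step 2 can be sketched with plain online backpropagation over the rule layer and the output layer. This is a minimal sketch, not the exact procedure of the original: the learning rate, the random initialization, the summed-squared-error tolerance used as the "convergence" test, and the toy fuzzified data set (two membership degrees in, two out) are all assumptions, and stepwise training and zeroing are omitted.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Rule-layer and output-layer activations (summation input, sigmoid)."""
    h = [sigmoid(b + sum(w * xi for w, xi in zip(row, x))) for row, b in zip(W1, b1)]
    y = [sigmoid(b + sum(w * hj for w, hj in zip(row, h))) for row, b in zip(W2, b2)]
    return h, y


def train_funn(X, Y, n_rules, lr=0.5, epochs=5000, tol=1e-3, seed=0):
    """Online backpropagation on fuzzified data; returns parameters and per-epoch SSE."""
    rng = random.Random(seed)
    n_in, n_out = len(X[0]), len(Y[0])
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_rules)]
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_rules)] for _ in range(n_out)]
    b1, b2 = [0.0] * n_rules, [0.0] * n_out
    history = []
    for _ in range(epochs):
        sse = 0.0
        for x, t in zip(X, Y):
            h, y = forward(x, W1, b1, W2, b2)
            sse += sum((yk - tk) ** 2 for yk, tk in zip(y, t))
            # Deltas for squared error with sigmoid units (computed before any update)
            d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, t)]
            d_hid = [h[j] * (1.0 - h[j]) * sum(d_out[k] * W2[k][j] for k in range(n_out))
                     for j in range(n_rules)]
            for k in range(n_out):                 # output-layer update
                for j in range(n_rules):
                    W2[k][j] -= lr * d_out[k] * h[j]
                b2[k] -= lr * d_out[k]
            for j in range(n_rules):               # rule-layer update
                for i in range(n_in):
                    W1[j][i] -= lr * d_hid[j] * x[i]
                b1[j] -= lr * d_hid[j]
        history.append(sse)
        if sse < tol:                              # treat a small SSE as convergence
            break
    return (W1, b1, W2, b2), history


# Hypothetical fuzzified data: (Low, High) memberships in -> (No, Yes) memberships out
X = [[1.0, 0.0], [0.0, 1.0], [0.8, 0.2], [0.2, 0.8]]
Y = [[1.0, 0.0], [0.0, 1.0], [0.8, 0.2], [0.2, 0.8]]
params, history = train_funn(X, Y, n_rules=2)
print(f"epochs: {len(history)}, SSE: {history[0]:.3f} -> {history[-1]:.4f}")
```

The weights in `params` are what Step 3 inspects; in this sketch `W1` holds the condition-to-rule connections and `W2` the rule-to-output connections.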

Step 3. Extracting an initial set of weighted rules. A set of rules {rj} is extracted from the trained network as follows. All the connections to an output neuron Bj that contribute significantly to its possible activation (their values, after adding the bias connection weight if one is used, are above a defined threshold Tha) are picked up, and their corresponding hidden nodes Rj, which represent combinations of fuzzy input labels, are analyzed further. Only the condition-element nodes that support activating the chosen hidden node Rj (their connection weights are above a threshold Thc) are used in the antecedent part of the rule Rj. The weights of the connections between the condition-element neurons and the rule nodes are taken as the initial relative degrees of importance of the antecedent fuzzy propositions. The weight of the connection between a rule node Rj and an output node Bj defines the initial value of the certainty degree CFj. The threshold Thc can be calculated by using the formula:

$$Th_c = \frac{Net_{max}}{k}$$

where Netmax is the desired value of the net input to a rule neuron for the corresponding rule to fire, and k is the number of input variables.
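Step 3 can be sketched as a filter over the two trained weight matrices, taking Thc = Netmax / k so that k condition elements with weights above Thc jointly reach Netmax. The sketch assumes `W_rule[s][i]` is the weight from condition element i to rule node s, `W_out[j][s]` is the weight from rule node s to output node j, and that the optional output bias is added to each candidate connection before the Tha test. The function name, the rule-dictionary format, and all weights are hypothetical; the label names are borrowed from the Bank Loan rules R1 and R2 in Step 5.

```python
def extract_weighted_rules(W_rule, W_out, in_labels, out_labels,
                           net_max, k, th_a, out_bias=None):
    """Return initial weighted rules as dicts: antecedent, consequent, CF."""
    th_c = net_max / k                         # Thc = Netmax / k
    if out_bias is None:
        out_bias = [0.0] * len(W_out)
    rules = []
    for j, row in enumerate(W_out):            # output neuron Bj
        for s, w_sj in enumerate(row):         # candidate rule node (Rj in the text)
            if w_sj + out_bias[j] <= th_a:     # not a significant contributor to Bj
                continue
            # Condition elements above Thc; their weights become the initial
            # degrees of importance of the antecedent propositions.
            antecedent = [(in_labels[i], w) for i, w in enumerate(W_rule[s]) if w > th_c]
            if antecedent:
                rules.append({"if": antecedent, "then": out_labels[j], "cf": w_sj})
    return rules


# Hypothetical trained weights: two rule nodes, four condition elements, two outputs
W_rule = [[1.2, -0.5, 1.0, 0.1],
          [-0.3, 1.1, 0.0, 1.0]]
W_out = [[2.0, -1.0],
         [-1.5, 1.8]]
rules = extract_weighted_rules(W_rule, W_out, ["SH", "SL", "RG", "RB"], ["DA", "DD"],
                               net_max=1.8, k=2, th_a=0.5)
for r in rules:
    print(r)
```

With Netmax = 1.8 and k = 2 the sketch uses Thc = 0.9, so the weights 1.2 and 1.0 qualify as condition elements while -0.5 and 0.1 do not.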

Step 4. Extracting simple fuzzy rules from the set of weighted rules. The threshold Thc used in step 3 was defined so that all the condition elements in a rule must collectively trigger the activation of that rule. This is analogous to an AND connective. The number of fuzzy predicates allowed in the antecedent part of a rule is no more than the number of input variables. The initial set of weighted rules can be converted into a set of simple fuzzy rules simply by removing the weights from the condition elements. Some antecedent elements, however, can trigger a rule without support from the rest of the condition elements; that is, their degrees of importance DIij = wij (connection weights) are higher than a chosen threshold, for example ThOR = Netmax. Such condition elements form separate rules. This transformation is analogous to decomposing a rule with OR connectives into rules with AND connectives only.
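Step 4 can be sketched as follows. This is a minimal sketch assuming each weighted rule is a list of (label, degree of importance) pairs plus a consequent; the function name and the example rule are hypothetical, and keeping the full AND rule alongside the single-element rules split off by ThOR is this sketch's reading of the text.

```python
def to_simple_rules(weighted_rules, th_or):
    """Drop the weights and split off condition elements that can fire a rule alone."""
    simple = []
    for rule in weighted_rules:
        labels = tuple(label for label, _ in rule["if"])
        simple.append((labels, rule["then"]))          # AND of all condition elements
        for label, di in rule["if"]:
            if di > th_or and len(labels) > 1:         # DIij above ThOR: fires on its own
                simple.append(((label,), rule["then"]))
    return simple


# Hypothetical weighted rule; ThOR chosen equal to Netmax = 2.0
weighted = [{"if": [("SH", 2.5), ("RG", 1.1)], "then": "DA", "cf": 2.0}]
for antecedent, consequent in to_simple_rules(weighted, th_or=2.0):
    print("IF " + " AND ".join(antecedent) + " THEN " + consequent)
# IF SH AND RG THEN DA
# IF SH THEN DA
```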

Step 5. Aggregating the initial weighted rules. All the initial weighted rules {ri1, ri2, ...} that have the same condition elements and the same consequent elements, differing only in their degrees of importance, are aggregated into one rule. The relative degree of importance DIij is calculated for every condition element Aij of a rule Ri as a normalized sum of the initial degrees of importance of the corresponding antecedent elements in the initial rules rij. The following two rules, R1 and R2, were obtained by aggregating the initial rules from figure 5.14:

R1: SH(1.2) AND RG(1) AND CG(2.5) → DA

R2: SL(1.1) AND RB(1) AND CB(1.8) → DD
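The aggregation in Step 5 can be sketched as grouping initial rules by their condition labels and consequent, then summing the degrees of importance per condition element. The text does not spell out the normalization, so this sketch assumes each summed degree is divided by the smallest one in the rule; that is only one reading, chosen because in R1 and R2 the weakest condition element has importance 1. The function name is hypothetical, and the two initial rules in the example are invented so the arithmetic lands on R1's numbers; they are not the rules of figure 5.14.

```python
def aggregate_rules(initial_rules):
    """Merge initial rules that share condition elements and consequent."""
    groups = {}
    for rule in initial_rules:
        key = (frozenset(label for label, _ in rule["if"]), rule["then"])
        groups.setdefault(key, []).append(rule)
    aggregated = []
    for (_, consequent), members in groups.items():
        totals = {}
        for rule in members:                       # sum DIs per condition element
            for label, di in rule["if"]:
                totals[label] = totals.get(label, 0.0) + di
        smallest = min(totals.values())
        # ASSUMPTION: normalize by the smallest sum, so the weakest element gets 1
        relative = {label: total / smallest for label, total in totals.items()}
        aggregated.append((relative, consequent))
    return aggregated


# Hypothetical initial rules with identical conditions and consequent
initial = [
    {"if": [("SH", 1.0), ("RG", 0.8), ("CG", 2.0)], "then": "DA"},
    {"if": [("SH", 1.4), ("RG", 1.2), ("CG", 3.0)], "then": "DA"},
]
for relative, consequent in aggregate_rules(initial):
    print(" AND ".join(f"{lab}({di:g})" for lab, di in relative.items()), "->", consequent)
# SH(1.2) AND RG(1) AND CG(2.5) -> DA
```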

An additional option in REFuNN is learning NOT connectives in the rules. In this case, negative weights whose absolute values exceed the set thresholds Thc and Tha are also considered, and the input labels corresponding to the connected nodes are included in the resulting simple fuzzy rules, preceded by a NOT connective.
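The NOT option can be sketched as a change to the antecedent filter of Step 3: a condition element whose weight is below -Thc is kept, but negated. This is a minimal sketch; the function name and example weights are hypothetical, and only the Thc test on condition elements is shown (the analogous Tha test on rule-to-output connections is left out).

```python
def antecedent_with_not(rule_weights, in_labels, th_c):
    """Build an antecedent string, negating strongly inhibitory condition elements."""
    terms = []
    for label, w in zip(in_labels, rule_weights):
        if w > th_c:
            terms.append(label)               # excitatory link: ordinary condition
        elif w < -th_c:
            terms.append("NOT " + label)      # w < 0 with |w| > Thc: negated condition
    return " AND ".join(terms)


print(antecedent_with_not([1.2, -1.1, 0.3], ["SH", "RB", "CG"], th_c=0.9))
# SH AND NOT RB
```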